Testing local AWS Lambda functions against a private AWS RDS instance

Introduction

Hello! New blog, first post!

I work for an I.T. provider that's helping a customer with a transition to an AWS-hosted cloud computing environment. There are a lot of moving pieces, but one piece of AWS tech that my team likes to use is AWS Lambda.

In particular, the SAM CLI tooling is extremely nice for fast development and feedback. I feel like I'll have more things to say about that, as there's a lot to talk about!

Workflow

My usual workflow when working on a Python-based lambda function is to write my initial code in my editor (VS Code, though I try to keep a roughly equivalent Neovim config for when I really want to bury myself in a terminal all day), use SAM CLI to deploy it into a development AWS account, then create some test events in the Lambda console and see what happens.

It's pretty nice: switching between an editor and a browser isn't much effort, and because my functions usually need to talk to a locked-down AWS RDS instance that has no public internet connectivity, I assumed this was going to be the standard way I'd go about testing and developing.

Becoming more productive

Turns out, there's some functionality included in the AWS CLI that can really speed things up. Yep, there's a way to make that private RDS instance reachable from my development PC :) It uses Session Manager to set up a remote port forwarding session through an EC2 instance to the RDS instance, and like most things where this fancy networking stuff happens, it feels like magic. So let's take a deeper look at how this works...

Disclaimer: this is going to include some discussion of Docker, the internals of which I am not fully familiar with and which also sometimes feels like magic, but hopefully everything should work out if you want to try this yourself.

The problem

Before I continue, let me clarify the problem I'm looking to solve here. I want to be able to use sam local invoke to trigger a local run of my lambda function inside of Docker. However, my lambda function needs to talk to a database that's not listening on the open internet for access.

The traditional way around this, at a guess, would be that I would run my own local database, with either a full or cut-down copy of data from the AWS development database server. And that's probably not a bad idea for a lot of cases. However, this is a complex database that I do not personally have much knowledge about, so I like to leave the database team alone and just use what they have provisioned for us in AWS Dev.

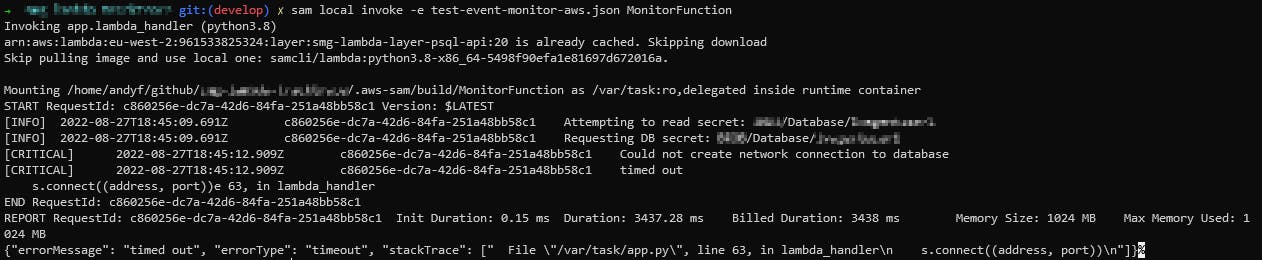

So, I need to connect to that AWS RDS instance. Right now, this is what happens if I invoke my lambda using the exact same event that I would use in the AWS console:

As you would expect, we simply cannot talk to the database, so the lambda terminates.

The solution

Let's create a Port Forwarding session using the AWS CLI!

    ~ aws ssm start-session \
        --region eu-west-2 \
        --target i-05c294fb4b9f96990 \
        --document-name AWS-StartPortForwardingSessionToRemoteHost \
        --parameters host="my-database.eu-west-2.rds.amazonaws.com",portNumber="5432",localPortNumber="5432"

For this example, i-05c294fb4b9f96990 is a little Linux EC2 instance that has connectivity to the RDS database, so I'll use it as my remote jump-in box. I then pass along the parameters of my RDS instance (you can find these from the RDS console!) - the host and port. 5432 is a bit of a giveaway that we're talking to an (Aurora) Postgres instance!
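If you'd rather drive this from a script, here is a minimal Python sketch that assembles the same command as an argument list. The function name `build_port_forward_command` is made up for illustration; the values are the ones from the command above. Note that the shell strips the double quotes around the parameter values, so they are left out here.

```python
import shlex


def build_port_forward_command(region, target, host, remote_port, local_port):
    """Return the argv list for an SSM port-forwarding session to a remote host."""
    # The shell removes the double quotes used on the command line,
    # so the CLI receives plain key=value pairs separated by commas.
    parameters = (
        f"host={host},"
        f"portNumber={remote_port},"
        f"localPortNumber={local_port}"
    )
    return [
        "aws", "ssm", "start-session",
        "--region", region,
        "--target", target,
        "--document-name", "AWS-StartPortForwardingSessionToRemoteHost",
        "--parameters", parameters,
    ]


if __name__ == "__main__":
    cmd = build_port_forward_command(
        region="eu-west-2",
        target="i-05c294fb4b9f96990",
        host="my-database.eu-west-2.rds.amazonaws.com",
        remote_port=5432,
        local_port=5432,
    )
    # Print a copy-pasteable command line; hand `cmd` to subprocess.run
    # instead if you want the script to start the session itself.
    print(shlex.join(cmd))
```

Building the list rather than a single string means nothing needs escaping if the host or instance ID ever comes from configuration.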

    Starting session with SessionId: andy.fogg@blah
    Port 5432 opened for sessionId andy.fogg@blah.

And here's our session up and running. To confirm it works, we can do a couple of quick things:

Poke it with a simple network test: </dev/tcp/localhost/5432

Connection accepted for session andy.fogg@blah

Use psql to do a full connection test:

    ~ psql "postgres://${sql_user}:${sql_pass}@localhost:5432/mydb"
    psql (12.11 (Ubuntu 12.11-0ubuntu0.20.04.1), server 12.8)
    SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)
    Type "help" for help.

    mydb=>

Looks good! Now let's figure out how we can use this with SAM invoke...
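The quick socket test above can also be scripted, which is handy if you want to wait for the tunnel to come up before kicking off an invoke. This is only a sketch: `wait_for_port` is a hypothetical helper, and the retry counts and delays are arbitrary defaults.

```python
import socket
import time


def wait_for_port(host, port, attempts=10, delay=1.0, timeout=2.0):
    """Return True once host:port accepts a TCP connection, False if it never does."""
    for attempt in range(attempts):
        try:
            # Same idea as the bash test `</dev/tcp/host/port`:
            # open a TCP connection and immediately close it again.
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            # Refused or timed out: pause before retrying, except after the last try.
            if attempt < attempts - 1:
                time.sleep(delay)
    return False


if __name__ == "__main__":
    # Assumes the port-forwarding session is listening on localhost:5432.
    print(wait_for_port("localhost", 5432, attempts=3))
```

It only proves that something is accepting connections on the port; the psql check is still the real test that the database is on the other end.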

Trial and error

My lambda function accepts a JSON event, inside of which I pass along the name of an AWS Secret to go and read database credentials from.

The first thing I tried was simply making a copy of this secret, and changing the 'host' value from the real RDS address to simply 'localhost'.

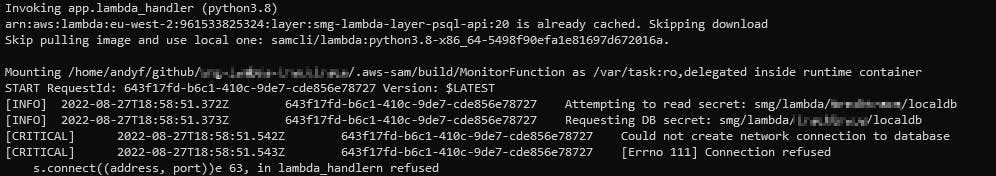

Hm! It didn't like that. That's because localhost inside the Docker container where the lambda is running is not the same localhost that's running my port-forwarding command!
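For clarity, the secret swap described above amounts to something like the following sketch. Only the 'host' key comes from this post; the other keys in the example secret and the helper name `with_host` are assumptions for illustration.

```python
import json


def with_host(secret_string, new_host):
    """Parse a JSON secret and return a copy with its 'host' value replaced."""
    secret = json.loads(secret_string)
    # Build a new dict so the parsed original is left untouched.
    return {**secret, "host": new_host}


if __name__ == "__main__":
    # Hypothetical secret contents; only 'host' is taken from the post.
    original = json.dumps({
        "host": "my-database.eu-west-2.rds.amazonaws.com",
        "port": 5432,
        "dbname": "mydb",
    })
    print(json.dumps(with_host(original, "localhost"), indent=2))
```

Whatever goes into 'host' is resolved from inside the container, which is why 'localhost' ends up pointing at the container itself rather than at the machine running the tunnel.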

Now this is where it got a bit fiddly. I wasn't sure how Docker networking between a container and host worked, so I tried a few different things here.

First, I needed to know what was happening inside the container, so I spun up a copy of the base Ubuntu Docker image, installed ping, ip, and curl inside it, then took a look at the output of ip a to see what the container networking looked like.

    root@32e0eb65ba3e:/# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
        link/ipip 0.0.0.0 brd 0.0.0.0
    3: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
        link/sit 0.0.0.0 brd 0.0.0.0
    50: eth0@if51: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever
    root@32e0eb65ba3e:/#

I noticed 172.17.0.2 was probably the IP Docker had assigned to my container, so what might be on 172.17.0.1?

    root@32e0eb65ba3e:/# ping 172.17.0.1
    PING 172.17.0.1 (172.17.0.1) 56(84) bytes of data.
    64 bytes from 172.17.0.1: icmp_seq=1 ttl=64 time=0.079 ms
    64 bytes from 172.17.0.1: icmp_seq=2 ttl=64 time=0.040 ms
    ^C
    --- 172.17.0.1 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1005ms
    rtt min/avg/max/mdev = 0.040/0.059/0.079/0.019 ms

That looks promising! Let's try a socket test against the port-forwarded database...

    root@32e0eb65ba3e:/# </dev/tcp/172.17.0.1/5432
    bash: connect: Connection refused
    bash: /dev/tcp/172.17.0.1/5432: Connection refused

Oh! That's unfortunate. I wasn't really sure why this didn't work, but I suppose it doesn't help that I am running Windows 10 with an Ubuntu WSL2 session, and Docker jammed in between them. There are layers of virtualisation going on here that confuse and scare me!

OK, new idea. Let's spin up the container again, but this time on the 'host' network rather than the 'bridge' network, which it seems to connect to by default.

    root@docker-desktop:/# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 brd 127.255.255.255 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
        link/ipip 0.0.0.0 brd 0.0.0.0
    3: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
        link/sit 0.0.0.0 brd 0.0.0.0
    4: services1@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether fa:33:0b:d1:74:a3 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 192.168.65.4 peer 192.168.65.5/32 scope global services1
           valid_lft forever preferred_lft forever
        inet6 fe80::f833:bff:fed1:74a3/64 scope link
           valid_lft forever preferred_lft forever
    6: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 1000
        link/ether 02:50:00:00:00:01 brd ff:ff:ff:ff:ff:ff
        inet 192.168.65.3/24 brd 192.168.65.15 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::50:ff:fe00:1/64 scope link
           valid_lft forever preferred_lft forever
    7: br-26798b49d31e: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:85:0e:8b:90 brd ff:ff:ff:ff:ff:ff
        inet 172.19.0.1/16 brd 172.19.255.255 scope global br-26798b49d31e
           valid_lft forever preferred_lft forever
    8: br-d4e96f963680: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:61:12:48:5f brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.1/16 brd 172.18.255.255 scope global br-d4e96f963680
           valid_lft forever preferred_lft forever
    9: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:90:f9:55:b7 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:90ff:fef9:55b7/64 scope link
           valid_lft forever preferred_lft forever

Oh, there's a lot more here! Some of this doesn't look useful, but there's a new IP that looks interesting, sitting on eth0 (which I believe is the default network interface name in many places): 192.168.65.3. Let's socket test that address and its neighbours:

    root@docker-desktop:/# </dev/tcp/192.168.65.1/5432
    ^C
    root@docker-desktop:/# </dev/tcp/192.168.65.2/5432
    root@docker-desktop:/# </dev/tcp/192.168.65.3/5432
    bash: connect: Connection refused
    bash: /dev/tcp/192.168.65.3/5432: Connection refused
    root@docker-desktop:/# </dev/tcp/192.168.65.4/5432
    bash: connect: Connection refused
    bash: /dev/tcp/192.168.65.4/5432: Connection refused

OK, trying 65.1 caused a hang that I had to Ctrl-C out of. That's basically a connection attempt to something that is neither answering nor refusing the connection.

65.2 returned instantly, so that's a connectable socket... we'll come back to that.

65.3 and 65.4 both refused, which I guess makes sense as those are IPs apparently used purely by this container, which doesn't run Postgres :)
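The three outcomes above (hang, instant return, refusal) map neatly onto a small probe function. A rough sketch, where `probe` is a hypothetical helper and the one-second timeout simply stands in for reaching for Ctrl-C:

```python
import socket


def probe(host, port, timeout=2.0):
    """Classify a TCP connection attempt as 'open', 'refused', 'timeout' or 'error'."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # Something owns the address, but nothing is listening on that port.
        return "refused"
    except (socket.timeout, TimeoutError):
        # Nothing answered and nothing refused: the "hang" case.
        return "timeout"
    except OSError:
        # Anything else, e.g. no route to the host.
        return "error"


if __name__ == "__main__":
    # The same four addresses that were tested by hand above.
    for last_octet in range(1, 5):
        host = f"192.168.65.{last_octet}"
        print(host, probe(host, 5432, timeout=1.0))
```

Run inside the container, this gives the same picture as the manual tests without having to interrupt anything.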

So! At this point, I tried to get SAM to use the 'host' network instead of 'bridge':

Timed out while attempting to establish a connection to the container. You can increase this timeout by setting the SAM_CLI_CONTAINER_CONNECTION_TIMEOUT environment variable. The current timeout is 20.0 (seconds).

Oh no! Why was this happening? I wasn't sure. Something to come back to at another time!

BUT I did then run my Ubuntu Docker image on the standard bridge network, and 192.168.65.2 was still connecting!

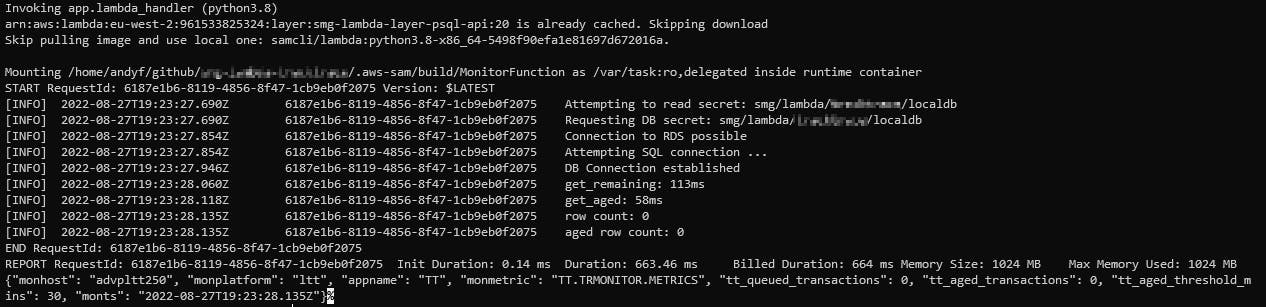

Let's go modify my test secret and swap out localhost for 192.168.65.2 and see what happens:

Success!
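An alternative to keeping a second secret around would be to override the host only when the function runs locally. This is a sketch of that idea, not what I did above: as far as I know SAM CLI sets AWS_SAM_LOCAL to "true" inside the container for local invokes, while `LOCAL_DB_HOST` is a made-up variable name that you would need to get into the function's environment yourself.

```python
import os


def resolve_db_host(secret_host, env=None):
    """Return the local override host under SAM local, else the host from the secret."""
    env = os.environ if env is None else env
    # AWS_SAM_LOCAL is expected to be "true" only for local invokes.
    running_locally = env.get("AWS_SAM_LOCAL") == "true"
    # LOCAL_DB_HOST is a hypothetical variable, e.g. 192.168.65.2 on my setup.
    override = env.get("LOCAL_DB_HOST")
    if running_locally and override:
        return override
    return secret_host


if __name__ == "__main__":
    print(resolve_db_host("my-database.eu-west-2.rds.amazonaws.com"))
```

The deployed function never sees the override, so the real secret stays the single source of truth in AWS.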

Wrapping up

It took a little bit of fiddling around to understand Docker networking, which isn't great and could definitely confuse people, but this was a nice proof of concept that I can in fact have my lambda functions, invoked locally, talk directly to an AWS database that would otherwise be inaccessible over the internet.

I think a lot of the connection issues came from my own slightly unconventional setup. Windows 10 and WSL2 already involve layers of networking magic to make everything work, so trying to also stuff in port forwarding and Docker containers was just asking too much for it to 'just work'. If I were on a Linux or macOS box, it may well have worked with a few fewer steps.

Anyway, I'm very happy with this, and I look forward to writing more posts as I continue learning about how AWS and SAM CLI make my development easier and more efficient!